ChatGDT — Chatting with Graph-based Digital Twins

Part 1: The Challenge of Natural Language Interfaces for Knowledge Graphs

This post was originally written in June 2023, and the research for this project started in the beginning of 2023, when GPT-3.5-turbo was state-of-the-art. While technology has evolved significantly since then, the core insights and methodologies remain valuable.

Introduction

Let’s say you have a question and a database that contains all the information you need to answer it, but no idea how to query it.

Since 2020, I’ve been working as a data engineer in the construction industry, modeling a digital twin using a knowledge graph expressed in RDF facts. A digital twin is a comprehensive digital representation of physical entities, capturing various aspects of their structure, systems, and behavior. It can act as a central database of information that can be queried using SPARQL.

In order to make a graph-based digital twin available to external parties I went on a journey to find a translator that can take natural language queries as input, search the graph, and convert the results back into natural language.

What You’ll Learn From This Series

By reading this series, you’ll discover:

- The practical challenges of connecting knowledge graphs with LLMs and how to approach them

- Why simpler prompting strategies sometimes outperform complex ones (a counterintuitive finding that challenged my assumptions)

- How to systematically test and evaluate different prompting techniques

- Techniques for iteratively exploring knowledge graphs using LLMs

- Insights about how LLMs reason with structured information

- How more recent advances like constrained decoding might improve these approaches

Whether you’re working with knowledge graphs, building interfaces for complex systems, or just curious about practical LLM applications beyond standard chatbots, this series offers both technical details and real-world lessons from my journey of trial and error.

Technical Foundation

Before diving into my experiments, it’s helpful to understand the basic building blocks of what we’re working with. This section covers the key concepts and tools that form the foundation of this exploration.

Knowledge Graphs and RDF

Knowledge graphs represent information as interconnected entities and relationships. They model the world as a network of facts, expressing each fact as a triple: subject, predicate, and object. This structure is intrinsically more machine-readable than natural language while preserving semantic meaning.

The Resource Description Framework (RDF) provides a standard model for expressing these facts. In RDF, each component of a triple can be:

- A URI (Uniform Resource Identifier) representing an entity or relationship

- A literal value (like a string or number)

- A blank node (representing an unnamed resource, though I deliberately avoided these in my examples)

For example, a simple fact like “Alice knows Bob” would be expressed as:

ex:alice foaf:knows ex:bob .

Where ex: and foaf: are namespace prefixes that expand to full URIs.

FOAF Ontology

For my experiments, I used the Friend of a Friend (FOAF) ontology, which is a simple vocabulary for describing people and their relationships. FOAF includes terms like:

foaf:Person- A class representing a personfoaf:name- A property for a person’s namefoaf:knows- A relationship between two peoplefoaf:age- A property for a person’s age

I extended this with some additional relationship types (using the rel: prefix) to create more complex scenarios:

rel:siblingOf- A relationship between siblingsrel:childOf- A relationship between a child and parentrel:employedBy- A relationship between an employee and employerrel:employerOf- Inverse ofrel:employedBy

SPARQL

SPARQL (pronounced “sparkle”) is the query language for RDF graphs. It allows you to search for patterns in the graph and retrieve matching data. A basic SPARQL query looks like:

PREFIX foaf: <http://xmlns.com/foaf/0.1/>

PREFIX ex: <https://example.com/people#>

SELECT ?name WHERE {

ex:alice foaf:knows ?person .

?person foaf:name ?name .

}

This query would return the names of all people that Alice knows.

Large Language Models and Their Capabilities

When ChatGPT was released in late 2022, I realized that large language models (LLMs) were becoming accessible tools for anyone, even non-experts, because they understand natural language. Better yet, these models can work with multiple languages and translate between them by comprehending the meaning of entire sentences and paragraphs. And importantly, “language” here isn’t limited to human languages but includes artificial ones like programming and query languages.

The LLM I used for most of my experiments was GPT-3.5-turbo (the model powering ChatGPT at the time of writing the original post in July 2023). These models have several capabilities that make them potentially useful for knowledge graph interaction:

- Natural language understanding: They can parse and comprehend human questions, even when ambiguous.

- Code generation: They can generate structured code, including SPARQL queries.

- In-context learning: They can adapt to new information provided in the prompt without additional training.

- Reasoning: They can follow chains of relationships to draw conclusions from facts.

However, they also have limitations:

- Context window: They have a limited context window (4,000 tokens for GPT-3.5-turbo at that time), which restricts how much of the knowledge graph can be provided at once.

- Hallucinations: When uncertain, they can generate plausible but incorrect information, which is particularly problematic when generating structured queries.

- Consistency: When choosing a temperature above 0, their performance can vary between runs, even with identical prompts.

Note: As of 2025, newer models have significantly larger context windows and gained the ability to do explicit reasoning. However, the core challenge of hallucinations persists, particularly for knowledge-intensive tasks.

Test Dataset

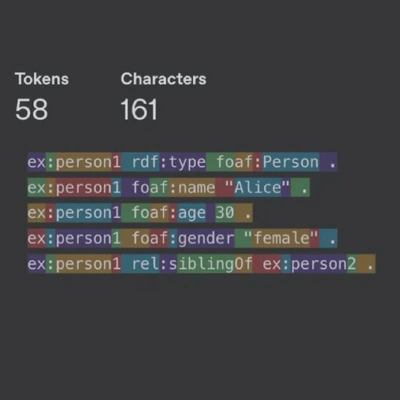

I created a small knowledge graph for testing with fictional people and their relationships. The graph was intentionally designed to test different inference capabilities:

ex:person1 rdf:type foaf:Person .

ex:person1 foaf:name "Alice" .

ex:person1 foaf:age 30 .

ex:person1 foaf:gender "female" .

ex:person1 rel:siblingOf ex:person2 .

ex:person1 rel:siblingOf ex:person3 .

ex:person2 rdf:type foaf:Person .

ex:person2 foaf:name "Bob" .

ex:person2 foaf:age 35 .

ex:person2 foaf:gender "male" .

ex:person2 rel:siblingOf ex:person1 .

ex:person2 rel:siblingOf ex:person3 .

ex:person3 rdf:type foaf:Person .

ex:person3 foaf:name "Eve" .

ex:person3 foaf:age 28 .

ex:person3 foaf:gender "female" .

ex:person3 rel:siblingOf ex:person1 .

ex:person3 rel:siblingOf ex:person2 .

# ...

Note: I deliberately used generic identifiers (ex:person1, ex:person2) in my test dataset rather than identifiers that include names (ex:alice, ex:bob). This was intentionally done to prevent the LLM from making assumptions based on the entity IDs. In some early experiments, I found that when using name-based identifiers, the model would sometimes correctly answer questions by pattern matching on the identifiers rather than properly traversing the relationships in the graph. Using generic identifiers ensured that the model could not cheat but had to actually understand and follow the relationships expressed in the triples.

This graph includes direct relationships (like Alice being a child of Person4) and implicit relationships that require inference (like determining that Person2 is Alice’s brother because they share a parent).

By designing questions that required traversing multiple relationships (like “Who is the mother of the person that the colleague of Alice’s brother lives with?”), I could test the limits of LLM-based querying approaches.

LLM Integration Tools

Two main tools were used in these experiments:

- LlamaIndex: A data framework for connecting custom data sources to LLMs, which includes vector store capabilities for retrieval.

- LangChain: A framework for creating LLM-powered applications, which includes tools for prompting, agents, and custom tool creation.

These tools provide different approaches to the same fundamental challenge: how to effectively connect structured data with the natural language capabilities of LLMs.

Initial Approaches

My journey to connect LLMs with knowledge graphs wasn’t straightforward. I explored several approaches before finding something that actually worked. Let me walk you through what I tried, where I failed, and what I learned along the way.

Using LlamaIndex

My colleague Maqbool showed me a small prototype he had built over the weekend using LlamaIndex that could report knowledge in certain cases when the information was presented in a very simple format.

His prototype followed a simple yet effective approach: import the RDF graph into LlamaIndex’s VectorStore and query it with unprocessed user questions. The vector store would retrieve relevant triples based on embedding similarity and send these as context to the LLM, which would then generate an answer based on this limited view on the graph.

Results and Analysis

This approach worked surprisingly well for small graphs and simple questions like “What is the age difference between Alice and Bob?” The LLM could use in-context learning to reason over the facts provided. For example, with these RDF triples:

ex:person1 rdf:type foaf:Person .

ex:person1 foaf:name "Alice" .

ex:person1 foaf:age 30 .

ex:person1 foaf:gender "female" .

ex:person1 rel:siblingOf ex:person2 .

ex:person2 rdf:type foaf:Person .

ex:person2 foaf:name "Bob" .

ex:person2 foaf:age 35 .

ex:person2 foaf:gender "male" .

ex:person2 rel:siblingOf ex:person1 .

The model could understand the entities and correctly answer: “The age difference between Alice and Bob is 5 years. Alice is 30, Bob is 35 years old.”

As long as the entire graph fits within the prompt, the model can answer simple questions correctly. However, larger graphs wouldn’t fit within the 4,000 token context window of GPT-3.

To give some perspective, this very short description of Alice already used 58 tokens that are competing for space with further instructions and reasoning. A graph of 60 people would barely fit into a 4,000 token context window, leaving almost no room for the actual inference.

The solution of storing the facts in a VectorStore and only retrieving the relevant facts to overcome this limitation is a first step, but retrieval based on a simple query imposes the risk of missing the relevant facts. Let me give you an example:

Let’s say we have a graph with facts of a million people, and Alice and Bob are not located close together but far apart:

ex:person1 rdf:type foaf:Person .

ex:person1 foaf:name "Alice" .

ex:person1 foaf:age 30 .

ex:person1 foaf:gender "female" .

ex:person1 rel:siblingOf ex:person546398 .

# ... ex:person2 to ex:person546397

ex:person546398 rdf:type foaf:Person .

ex:person546398 foaf:name "Bob" .

ex:person546398 foaf:age 35 .

ex:person546398 foaf:gender "male" .

ex:person546398 rel:siblingOf ex:person1 .

# ... ex:person546400 to ex:person1000000

When querying the VectorStore with slightly more complex questions, such as “What is the age difference between Alice and her brother?”, the vector search might retrieve the fact ex:person1 foaf:name "Alice" . because the name “Alice” is directly mentioned in the query. Depending on the embedding model, it might even return some relationships of the form ex:personX rel:siblingOf ex:personY . , since the word “brother” in the query hints at siblings being relevant. Though it is questionable if facts like ex:personX foaf:gender "male" . would be picked up just because the word “brother” refers to male individuals. Therefore, with a million people in the graph, it is almost certain that no facts about the specific individual ex:person546398 (Bob) would be retrieved, as vector search is not capable enough to determine that “Bob” is the particular sibling we’re looking for. For this, we need to do some form of reasoning as part of the retrieval process.

The model would admit insufficient information at best when given an insufficient subset of facts. However, due to the aforementioned problem of hallucinations, it might confidently produce an answer like “The age difference between Alice and her brother is 3 years” without any factual basis.

Key Insight

I learned something important: the model could perform complex inference if all the necessary knowledge was available. Determining that Alice was Bob’s sibling and that Bob, being male, was Alice’s brother might seem trivial to humans, but it represented genuine logical reasoning for the model.

Finding Alice’s brother is intuitive for a human looking at a graph: we start with Alice and follow the most promising relationships. But programming this flexibility is challenging. What if the relationship was labeled ex:hasBrother instead of rel:siblingOf? What if siblings weren’t directly connected but shared common parents? The complexity grows quickly.

Learnings

I realized I needed to retrieve a small but complete subgraph containing all facts relevant to answering a question. As long as this subgraph fits into the context window, the LLM could reason over it effectively. Unfortunately, as described before, a simple VectorStore using embeddings wasn’t sophisticated enough to retrieve the right subgraph for slightly advanced queries.

Using LangChain

My next attempt involved LangChain, which offers prompting chains, agents, and custom tools. I created a sparql_query tool that accepted valid SPARQL queries as input and returned results as observations for the model.

While this sounded promising, LangChain’s prompts were quite verbose, and the parsing of responses was inconsistent. I spent too much time trying to inject additional instructions into LangChain prompts, eventually deciding it would be easier to craft my own from scratch:

=== PROMPT ===

### Situation

You are a knowledge graph expert. Your high-level objective is to answer the following question given by a non-expert user: "Who is Alice's brother?"

### Tools

split_question: You can split the question into a list of subquestions. Arguments:

- [<list of subquestions>]

vector_store: You have access to a vector store, which you can query with natural language questions to gain some insights that help you understand the structure of relevant parts of the graph. Arguments:

- <natural language question>

sparql_query: You can query the graph by writing lines of SPARQL that will be inserted into a template. Arguments:

- `<SPARQL query>`

### Task

You have to decide what to do next.=

### Knowledge

=== RESPONSE FORMAT ===

### Thoughts

<your thoughts about the current state of knowledge and progress in the strategy>

### Progress

<Estimate progress in percent>%. <Message with how you would formulate the final answer with your findings so far; add as much concrete information as possible>

### Next Step

<Which tool would you use next and why?>

### Tool Selection

<split_question|vector_store|sparql_query> <arguments>

### Final Remarks

<Anything else you want to say to the user>

=== END ===

This approach was better, especially since I could easily modify the prompt. I was expecting the model to write queries like:

SELECT ?age_alice ?age_brother WHERE {

?alice rdf:type foaf:Person ;

foaf:name "Alice" ;

foaf:age ?age_alice ;

rel:siblingOf ?brother .

?brother rdf:type foaf:Person ;

foaf:age ?age_brother ;

foaf:gender "male" ;

rel:siblingOf ?alice .

}

Maybe even add an additional line to calculate the age difference, like BIND( ?age_diff = ?age_alice - ?age_brother ). And sometimes it actually did, or nearly did. If you have ever tried to execute code written by an LLM, you have probably experienced that it’s often not working on the first try. Unfortunately, an empty response will be returned if the query is syntactically correct but semantically wrong. Therefore, the model can’t differentiate if it made a mistake or the information it was querying for does not exist in the graph. Consequently, it will simply assume that the graph does not contain the information required to answer the question. And it happens too often that the model simply tries to use a predicate that doesn’t exist, such as rel:sibling (as opposed to rel:siblingOf). While it is clear what the intention is for an expert user, a non-expert will likely not be able to guide the model in the right direction to fix the SPARQL query.

UPDATE (2025): Newer approaches using constrained decoding could potentially solve the specific issue of using, e.g., predicates that don’t exist. This technique restricts the model to generating SPARQL queries using predicates that actually exist in the ontology. By filtering the model’s output to only allow valid predicates like rel:siblingOf and preventing invalid ones like rel:sibling, we could guarantee syntactically valid queries against our specific knowledge graph. While this wasn’t available during my original experiments, it represents a promising direction for improving reliability in LLM-to-knowledge-graph interfaces.

However, these were not the only hallucinations. Sometimes, actually, quite often, the model simply started to assume names of entities, e.g., Bob (which I must admit was a good guess) or John for Alice’s brother. Though, more unoriginal names, such as Alice's brother, were also assumed. It is highly unlikely that this would be the name of the entity in question, but the model does not consider this. In the end, it just generates more or less random text, which surprisingly often generates results that are good enough for a human that can easily do the needed interpretation on their end but, unfortunately, not good enough for a computer program such as a querying engine.

Iterative Query Building

My next idea mimicked how humans typically explore knowledge graphs. When I write queries, I start with something simple to get a feel for the structure of the graph, such as:

=== PROMPT ===

### System Message

You are an expert in exploring RDF knowledge graphs using SPARQL. Your high-level objective is to answer the following question: "What is the age difference between Alice and her brother?".

### Capabilities

You can query the graph by writing lines of SPARQL that will be inserted into the following template:

"""

prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#>

prefix foaf: <http://xmlns.com/foaf/0.1/>

prefix rel: <http://purl.org/vocab/relationship/>

prefix ex: <https://example.com/people#>

SELECT * WHERE {

{INPUT_LINES_INSERTION}

}

"""

The query will be executed, and the results will be returned to you. You only have to concern yourself with modifying lines in the WHERE clause.

### History

You can learn from this history of previous attempts and their respective results:

{get_previous_queries_and_results()}

### Task

You should iteratively improve the query step by step. It will be executed, and its results will be available in the history in the next iteration. This is the current query and the results it returned:

{get_last_working_query_and_results()}

### Strategy

First, write out your thoughts. Does the current query yield results that will help you answer the question with some improvements? Is it going in the right direction when considering the results of previous queries? If not, what has to be changed? If yes, what can be improved to get closer to your goal? Consider previous queries so that you don't try things again that did not yield results previously. You are done when you think you have enough knowledge to answer the question.

To be able to iteratively improve the query, you should only do one of the following things: add a line, remove a line, or change a line.

=== RESPONSE FORMAT ===

### Thoughts

<your thoughts about the current query and its results. Does it provide **all** needed information, or how would you improve it?>

### Are we done?

<yes|no>

### Current state of knowledge

<Message with how you would formulate the final answer with your findings so far; add as much concrete information as possible>

If not done, come up with 3 different ideas on how to improve the query.

### Idea <1/2/3>

<your idea>

Select the idea that you believe is the simplest yet most effective. Explain your reasoning for this choice and describe the modifications you would make to the query. In order to provide you with more detailed feedback from the execution, each line will be added one after the other, and the partial query will be executed. Every execution with a non-empty result set will be added to the provided history.

First, write down step-by-step what you want to achieve with each line added and order the lines in a way that allows you to gain the most insights about the graph with each execution, as mentioned before. Also, add your reasoning for the order of each step.

### Step-by-step description

a. <First step> (<reasoning why this step should be first>)

b. <Second step> (<reasoning why this step should be second>)

...

Remember to end each line with a `.`.

### Modifications

[

{{"line": <line number>, "action": "<add>", "text": "<new text> ."}},

{{"line": <line number>, "action": "<edit>", "text": "<modified text>. "}},

{{"line": <line number>, "action": "<delete>", "text": "<text to be deleted> . "}},

...

]

=== END ===

At the placeholders for history and previous queries ({get_previous_queries_and_results()} and {get_last_working_query_and_results()}), I’d insert the actual history of queries and results. My idea was to provide all the information needed for the model to understand how I wanted to collaborate.

This complex approach was token-intensive, but after many attempts, it sometimes managed to find Alice’s brother. However, it tended to overcomplicate things, such as using SPARQL as a calculator to calculate simple age differences in very complex ways or retrieving unnecessary information. It wasn’t efficient or robust, but it was the first of all tried approaches so far that produced somewhat useful results.

I considered building on this foundation, letting the model gradually gain knowledge about the graph until it felt confident to answer. However, the whole system fell apart once I introduced more complex queries. The model started running in circles, creating increasingly complex queries, effectively wandering aimlessly hoping to stumble upon relevant information. Realistically speaking, I wasn’t getting any closer to a viable solution. So, I was back to square one.

What’s Next

After multiple attempts with sophisticated prompts and complex query strategies, I was still stuck without a reliable solution. The approaches I’d tried so far all had fundamental limitations: either they couldn’t handle large graphs, generated hallucinated information, or simply wandered aimlessly without finding the correct answer.

But I wasn’t ready to give up. I realized I needed to approach the problem in a completely different way. If complex prompting strategies weren’t working, perhaps I should try something radically simpler? How about creating a systematic testing approach with incrementally more complex questions so that I could get a comprehensive understanding of the model’s capabilities and limitations?

In Part 2, I’ll share how I developed a rigorous testing methodology that led to a surprising discovery: sometimes, the most straightforward approach is the most effective. I’ll reveal how my most sophisticated prompting techniques were consistently outperformed by something far more basic and how this counterintuitive finding completely changed my approach to connecting LLMs with knowledge graphs.

I’ll also share my process for creating a systematic testing framework that allowed me to measure performance across different prompting strategies with varying question complexity. This data-driven approach led to better results and revealed interesting insights about how LLMs actually reason with structured knowledge.